一种具有O(1)复杂度的LFU算法实现(java 实现)

创始人

2025-05-29 13:59:12

0次

最近在做一些数据缓存方面的工作。研究了LRU算法和LFU算法。

- LRU有成熟的O(1)复杂度算法:参考:https://juejin.cn/post/7055600927671058469

- LFU最佳实现方法是O(log n)的。但项目中要求算法高效,所以学习了O(1)复杂度的算法实现:参考: https://tech.ipalfish.com/blog/2020/03/25/lfu/

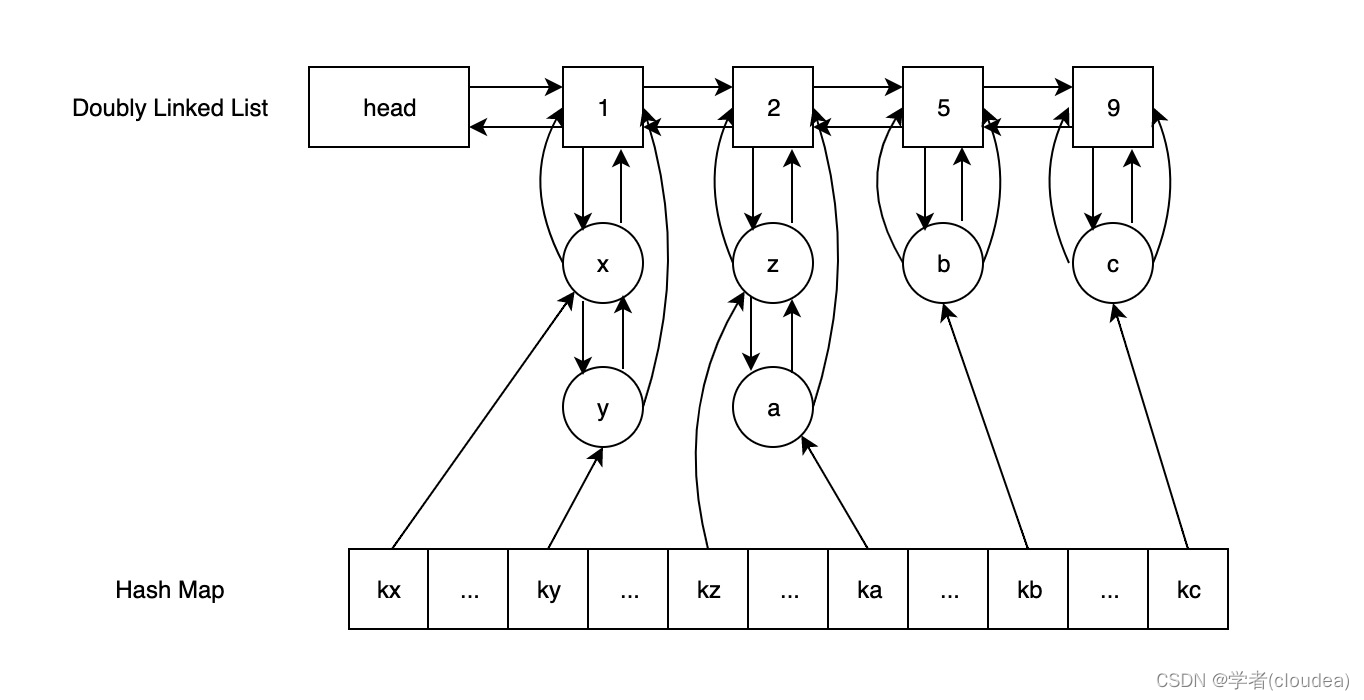

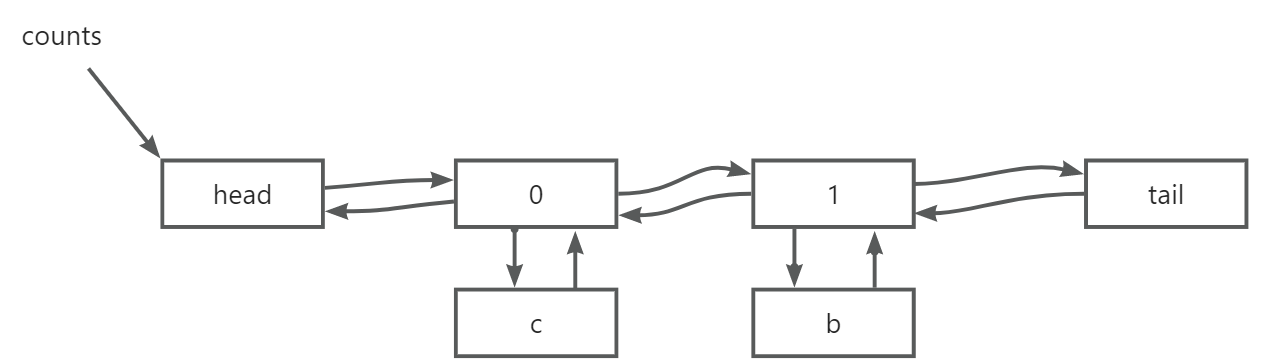

算法本质上是维护一个如图的数据结构:

- 一个HashMap

- 一个双向链表(链表元素又是一个双向子链表,具有相同访问次数的数据会放在相同的链表中)。

此时聪明的你应该想到如何实现了!下面以一个例子说明其原理。为了画图方便。省略了两类指针:

- HashMap指向子链表元素的指针

- 双向子链表元素指向双向链表节点的指针

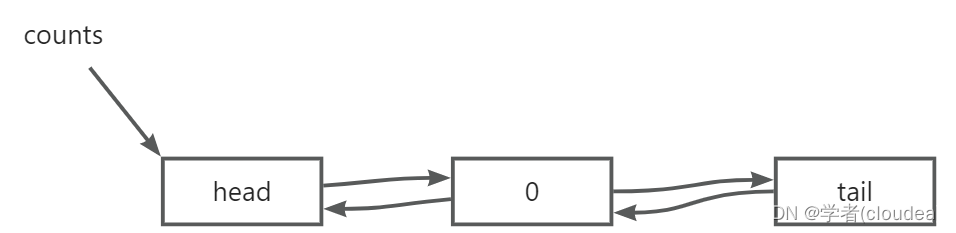

在本文的实现中,初始时只有一个频次为0的链表:

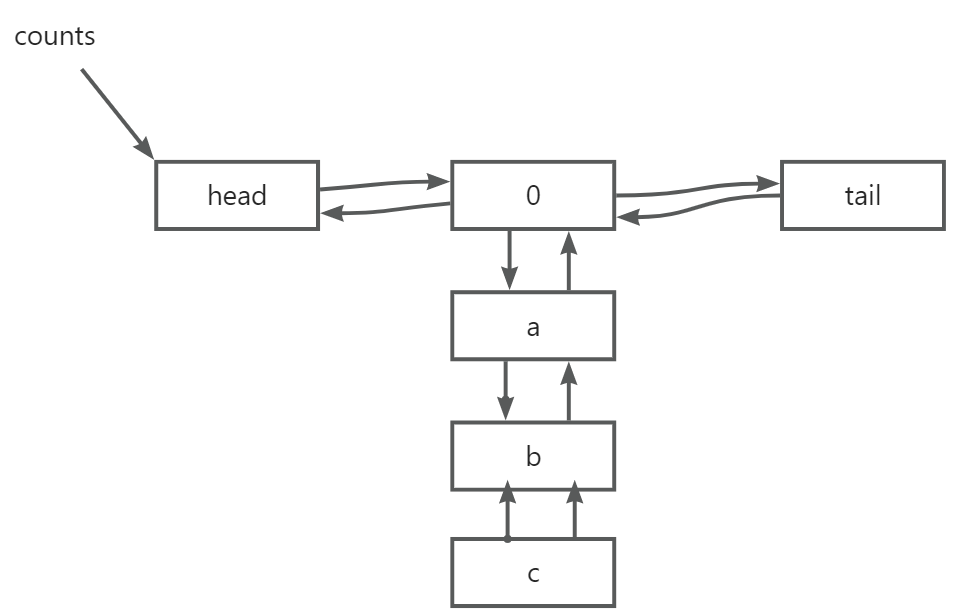

当加入值时,都默认放在这个频次为0的链表中。比如添加a, b, c后。

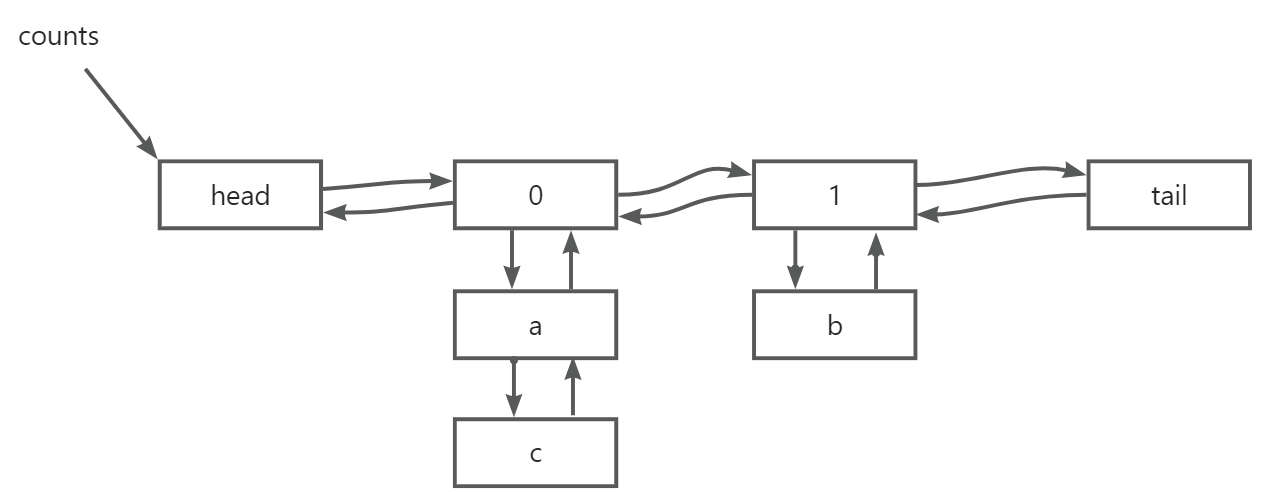

当获取值时,会把它的频次加1,以b为例,即会把b移动到一个新的链表。

当缓存不足时,将优先淘汰访问频次最小的。可以从head开始查找,将找到的第一个从链表中删除即可。即会把a删除,删除后如图。

无论是加入、获取、淘汰,操作的复杂度都是O(1)的。

package com.iscas.intervention.data.cache;import java.util.HashMap;/*** O(1) 的LFU实现* @author cloudea* @date 2023/03/17*/

public class LFU {/*** 保存数据的双向链表结点*/public static class LFUListNode {public String key;public Object value;public LFUListNode last;public LFUListNode next;public LFUDoubleListNode master; // 指向所属的频次节点public LFUListNode(String key, Object value) {this.key = key;this.value = value;}}/*** 保存频次的双向链表节点*/public static class LFUDoubleListNode {public int count; // 频次public LFUListNode head ;public LFUListNode tail;public LFUDoubleListNode last;public LFUDoubleListNode next;public LFUDoubleListNode (int count){this.count = count;// 初始化哨兵this.head = new LFUListNode(null, null);this.tail = new LFUListNode(null, null);this.head.next = this.tail;this.tail.last = this.head;}}/*** 频次双向链表*/public static class LFUDoubleList {public LFUDoubleListNode head;public LFUDoubleListNode tail;public LFUDoubleList(){this.head = new LFUDoubleListNode(0);this.tail = new LFUDoubleListNode(0);this.head.next = this.tail;this.tail.last = this.head;}}private HashMap caches = new HashMap<>();private LFUDoubleList counts = new LFUDoubleList();public LFU(){// 初始化访问频次为0的结点LFUDoubleListNode lfuDoubleListNode = new LFUDoubleListNode(0);lfuDoubleListNode.last = counts.head;lfuDoubleListNode.next = counts.tail;counts.head.next = lfuDoubleListNode;counts.tail.last = lfuDoubleListNode;}public void set(String key, Object value){if(caches.containsKey(key)) {// 如果存在,则更新一下value即可caches.get(key).value = value;}else{// 否则,需要放入链表中(放入频率为0的链表)LFUListNode node = new LFUListNode(key, value);node.master = counts.head.next;node.last = node.master.tail.last;node.next = node.master.tail;node.last.next = node;node.next.last = node;caches.put(key, node);}}public Object get(String key){if(caches.containsKey(key)){// 如果存在,直接返回,然后更新频次 (频次加1)LFUListNode node = caches.get(key);LFUDoubleListNode nextMaster = node.master.next;if(nextMaster.count != node.master.count + 1) {// 新建一个master节点LFUDoubleListNode A = node.master;LFUDoubleListNode B = node.master.next;LFUDoubleListNode n = new LFUDoubleListNode(node.master.count + 1);n.last = A;n.next = B;A.next = n;B.last = n;nextMaster = n;}// 把结点从当前master删除node.last.next = node.next;node.next.last = node.last;// 把结点加入到下一个masternode.last = nextMaster.tail.last;node.next = nextMaster.tail;node.last.next = node;node.next.last = node;node.master = nextMaster;// 返回节点值return node.value;}return null;}/*** 删除频次最小的元素*/public Object remove(){if(size() != 0){for(var i = counts.head.next; i != counts.tail; i = i.next){for(var j = i.head.next; j != i.tail; j = j.next){// 删除当前元素j.last.next = j.next;j.next.last = j.last;// 删除当前master(如果没有结点的话)if(j.master.head.next == j.master.tail){if(j.master.count != 0){j.master.last.next = j.master.next;j.master.next.last = j.master.last;}}// 从hashmap中删除caches.remove(j.key);return j.value;}}}return null;}public int size(){return caches.size();}

} 相关内容

热门资讯

山西太钢不锈钢股份有限公司 2...

来源:证券日报 证券代码:000825 证券简称:太钢不锈 公告编号:2026-001 本公司及董...

把自己的银行贷款出借给别人,有...

新京报讯(记者张静姝 通讯员邸越洋)因贷款出借后未被归还,原告牛女士将被告杨甲、杨乙诉至法院,要求二...

金价暴跌,刚买的金饰能退吗?有...

黄金价格大跌,多品牌设置退货手续费。 在过去两三天,现货黄金价格经历了“过山车”般的行情,受金价下跌...

预计赚超2500万!“豆腐大王...

图片来源:图虫创意 在经历了一年亏损后,“豆腐大王”祖名股份(003030.SZ)成功实现扭亏为盈。...

特朗普提名“自己人”沃什执掌美...

据新华社报道,当地时间1月30日,美国总统特朗普通过社交媒体宣布,提名美国联邦储备委员会前理事凯文·...

爱芯元智将上市:连年大额亏损,...

撰稿|多客 来源|贝多商业&贝多财经 1月30日,爱芯元智半导体股份有限公司(下称“爱芯元智”,HK...

一夜之间,10只A股拉响警报:...

【导读】深康佳A等10家公司昨夜拉响退市警报 中国基金报记者 夏天 1月30日晚间,A股市场迎来一波...

谁在操控淳厚基金?左季庆为谁趟...

2026年1月6日,证监会一纸批复核准上海长宁国有资产经营投资有限公司(下称“长宁国资”)成为淳厚基...

工商银行党委副书记、行长刘珺会...

人民财讯1月31日电,1月29日,工商银行党委副书记、行长刘珺会见来访的上海电气集团党委书记、董事长...

布米普特拉北京投资基金管理有限...

从亚马逊到联合包裹,一场席卷美国企业的“瘦身”行动正在持续。多家企业近期承认,近年来的扩张步伐迈得过...

酒价内参1月31日价格发布 飞...

来源:酒业内参 新浪财经“酒价内参”过去24小时收集的数据显示,中国白酒市场十大单品的终端零售均价在...

筹码集中的绩优滞涨热门赛道股出...

2025年以来,在受多重因素的刺激下,科技、航天、基础化工等热门赛道中走出轮番上涨的结构性行情,其中...

2026年A股上市公司退市潮开...

来源:界面新闻 界面新闻记者 赵阳戈 随着2026年序幕拉开,A股市场新一轮“出清”即将上演。...

雷军官宣新直播:走进小米汽车工...

【太平洋科技快讯】1 月 31 日消息,小米创办人、董事长兼 CEO 雷军在社交媒体发文宣布,将于 ...

现货黄金直线跳水,跌破5200...

新闻荐读 1月29日晚,现货黄金白银快速走低,回吐盘中全部涨幅。23:15左右,现货黄金跌破5300...

加拿大拟与多国联合设立国防银行

新华社北京1月31日电 加拿大财政部长商鹏飞1月30日说,加拿大将在未来数月与国际伙伴密切合作,推进...

马斯克大消息!SpaceX申请...

据券商中国,美东时间1月30日,路透社报道,据两位知情人士透露,马斯克旗下SpaceX公司2025年...

澳网:雷巴金娜2-1萨巴伦卡女...

北京时间1月31日,2026赛季网球大满贯澳大利亚公开赛继续进行,在女单决赛中,5号种子雷巴金娜6-...

春节前白酒促销热:“扫码抽黄金...

春节临近,白酒市场再现价格异动。 近日,飞天茅台批价拉升,有酒商直言“年前要冲2000元关口”,引发...